On Generalizability of 'Competition of Mechanisms' 🍎

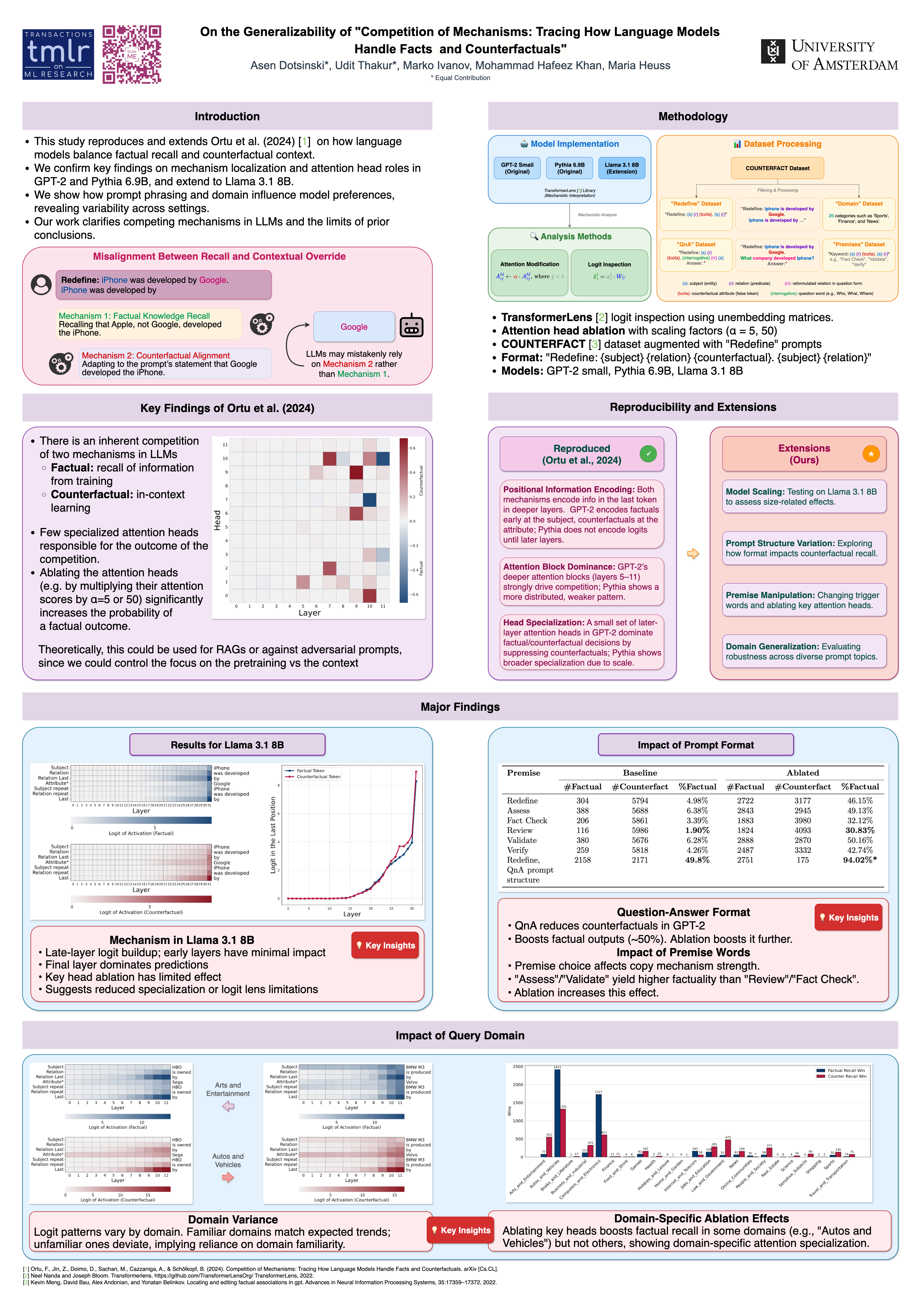

This project reproduces and extends the findings of “Competition of Mechanisms: Tracing How Language Models Handle Facts and Counterfactuals” [1]. We validate the original study’s results on GPT-2 and Pythia 6.9B, confirming the role of attention mechanisms in resolving conflicts between factual and counterfactual information. Our extensions test a larger model (Llama 3.1 8B), vary the prompt structure, and evaluate domain-specific prompts. These experiments reveal key limitations in attention head specialization and ablation strategies, and show that the original findings depend on model size, architecture, and input design.

Code: link

@misc{dotsinski2025generalizabilitycompetitionmechanismstracing,
      title={On the Generalizability of "Competition of Mechanisms: Tracing How Language Models Handle Facts and Counterfactuals"},
      author={Asen Dotsinski and Udit Thakur and Marko Ivanov and Mohammad Hafeez Khan and Maria Heuss},
      year={2025},
      eprint={2506.22977},
      archivePrefix={arXiv},
      primaryClass={cs.CL},
      url={https://arxiv.org/abs/2506.22977},
}

References

- [1] F. Ortu, Z. Jin, D. Doimo, M. Sachan, A. Cazzaniga, and B. Schölkopf, “Competition of Mechanisms: Tracing How Language Models Handle Facts and Counterfactuals,” in Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, Aug. 2024, pp. 8420–8436, doi: 10.18653/v1/2024.acl-long.458.

This post is licensed under CC BY 4.0 by the author.